An increasing number of proposed applications on top of Ethereum rely on some kind of incentivized, multi-party data provision – whether voting, random number collection, or other use cases where getting information from multiple parties to increase decentralization is highly desirable, but also where there is a strong risk of collusion. A RANDAO can certainly provide random numbers with much higher cryptoeconomic security than simple block hashes – and certainly better than deterministic algorithms with publicly knowable seeds – but it is not infinitely collusion-proof: if 100% of participants in a RANDAO collude with each other, they can set the result to whatever they want. A much more controversial example is the prediction market Augur, where decentralized event reporting relies on a highly advanced version of a Schelling scheme, where everyone votes on the result and everyone in the majority gets rewarded. The theory is that if you expect everyone else to be honest, your incentive is also to be honest so as to be in the majority, and so honesty is a stable equilibrium; the problem, however, is that if more than 50% of the participants collude, the system breaks.

The fact that Augur has an independent token provides a partial defense against this problem: if the voters collude, then the value of Augur’s token can be expected to decrease to near-zero as the system becomes perceived as useless and unreliable, and so the colluders lose a large amount of value. However, it is certainly not a total defense. Paul Sztorc’s Truthcoin (and also Augur) includes a further defense, which is quite economically clever. The core mechanism is simple: rather than simply awarding a static amount to everyone in the majority, the amount awarded depends on the level of disagreement among the final votes, and the more disagreement there is the more majority voters get, and minority voters get an equally large amount taken out of their security deposit.

The intent is simple: if you get a message from someone saying “hey, I am starting a collusion; even though the actual answer is A, let’s all vote B”, in a simpler scheme you may be inclined to go along. In Sztorc’s scheme, however, you may well come to the conclusion that this individual is actually going to vote A, and is trying to convince only a few percent of people to vote B, so as to steal some of their money. Hence, it creates a lack of trust, making collusions harder. However, there is a problem: precisely because blockchains are such excellent devices for cryptographically secure agreements and coordination, it’s very hard to make it impossible to collude provably.

To see how, consider the simplest possible scheme for how reporting votes in Augur might work: there is a period during which everyone can send a transaction supplying their vote, and at the end the algorithm calculates the result. However, this approach is fatally flawed: it creates an incentive for people to wait as long as possible to see what all the other players’ answers are before answering themselves. Taking this to its natural equilibrium, we would have everyone voting in the last possible block, leading to the miner of the last block essentially controlling everything. A scheme where the end comes randomly (eg. the first block that passes 100x the usual difficulty threshold) mitigates this somewhat, but still leaves a great amount of power in the hands of individual miners.

The standard cryptographer’s response to this problem is the hash-commit-reveal scheme: every player P[i] determines their response R[i], and there is a period during which everyone must submit h(R[i]) where h can be any pre-specified hash function (eg. SHA3). After that, everyone must submit R[i], and the values are checked against the previously provided hashes. For two-player rock paper scissors, or any other game which is purely zero-sum, this works great. For Augur, however, it still leaves open the opportunity for credible collusion: users can voluntarily reveal R[i] before the fact, and others can check that this indeed matches the hash values that they provided to the chain. Allowing users to change their hashes before the hash submitting period runs out does nothing; users can always lock up a large amount of money in a specially crafted contract that only releases it if no one provides a Merkle tree proof to the contract, culminating with a previous blockhash, showing that the vote was changed, thereby committing not to change their vote.
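As a rough illustration of the two phases, and of why pre-revelation stays credible, here is a minimal Python sketch. The `CommitRevealRound` class, its method names and the example responses are hypothetical, and `hashlib.sha3_256` merely stands in for the pre-specified hash h.

```python
import hashlib


def h(data: bytes) -> bytes:
    """Stand-in for the pre-specified hash function."""
    return hashlib.sha3_256(data).digest()


class CommitRevealRound:
    """Hypothetical bookkeeping for one round: hashes first, responses second."""

    def __init__(self) -> None:
        self.commitments: dict[str, bytes] = {}
        self.responses: dict[str, bytes] = {}
        self.revealing = False

    def submit_hash(self, player: str, digest: bytes) -> None:
        if self.revealing:
            raise RuntimeError("commit period is over")
        self.commitments[player] = digest

    def start_reveal(self) -> None:
        self.revealing = True

    def submit_response(self, player: str, response: bytes) -> bool:
        """Accept R[i] only if it matches the hash submitted earlier."""
        if not self.revealing:
            raise RuntimeError("reveal period has not started")
        if h(response) != self.commitments.get(player):
            return False
        self.responses[player] = response
        return True

    def matches_commitment(self, player: str, claimed: bytes) -> bool:
        """What a would-be colluder can check before the reveal period."""
        return h(claimed) == self.commitments.get(player)


game = CommitRevealRound()
game.submit_hash("alice", h(b"rock|alice-secret"))
game.submit_hash("bob", h(b"paper|bob-secret"))

# Alice privately shows Bob her response; Bob can verify it against the public hash.
print(game.matches_commitment("alice", b"rock|alice-secret"))   # True

game.start_reveal()
print(game.submit_response("alice", b"scissors|alice-secret"))  # False: does not match
print(game.submit_response("alice", b"rock|alice-secret"))      # True
```

The `matches_commitment` check is the crux: nothing in the protocol stops a player from handing their response to someone else early, and the public hash makes that disclosure verifiable.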

A New Solution?

However, there is also another path to solving this problem, one that has not yet been adequately explored. The idea is this: instead of making pre-revelation for collusion purposes costly within the primary game itself, we introduce a parallel game (albeit a mandatory one, backed by the oracle participants’ security deposits) where anyone who pre-reveals any information about their vote to anyone else opens themselves up to the risk of being (probabilistically) betrayed, without any way to prove that it was that specific person who betrayed them.

The game, in its most basic form, works as follows. Suppose that there is a decentralized random number generation scheme where users must all flip a coin and supply either 0 or 1 as inputs. Now, suppose that we want to disincentivize collusion. What we do is simple: we allow anyone to register a bet against any player in the system (note the use of “anyone” and “any player”; non-players can join as long as they supply the security deposit), essentially stating “I am confident that this person will vote X with more than 1/2 probability”, where X can be 0 or 1. The rules of the bet are simply that if the target supplies X as their input then N coins are transferred from the target to the bettor, and if the target supplies the other value then N coins are transferred from the bettor to the target. Bets can be made in an intermediate phase between commitment and revelation.
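The bet rules above can be sketched in a few lines of Python. The function names are hypothetical, and `q` (the true probability that the target supplies the predicted value) is a parameter introduced here purely for illustration.

```python
from fractions import Fraction


def settle_binary_bet(target_input: int, predicted: int, n: int) -> int:
    """Bettor's gain in coins; a negative value means the bettor pays the target."""
    if target_input not in (0, 1) or predicted not in (0, 1):
        raise ValueError("inputs must be 0 or 1")
    return n if target_input == predicted else -n


def expected_bettor_gain(q: Fraction, n: int) -> Fraction:
    """Expected gain when the target supplies the predicted value with probability q."""
    return q * n - (1 - q) * n


print(settle_binary_bet(target_input=1, predicted=1, n=10))  # 10
print(settle_binary_bet(target_input=0, predicted=1, n=10))  # -10
print(expected_bettor_gain(Fraction(1, 2), 10))              # 0
print(expected_bettor_gain(Fraction(51, 100), 10))           # 1/5
```

Against a genuinely fair coin the bet is worth nothing in expectation, but any edge over 1/2, however small, turns into a positive expected gain of N * (2q - 1) for the bettor.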

Probabilistically speaking, any provision of information to any other party is now potentially extremely costly; even if you convince someone else that you will vote 1 with 51% probability, they can still take coins from you probabilistically, and they will win out in the long run as such a scheme gets repeated. Note that the other party can bet anonymously, and so can always pretend that it was a passerby gambler making the bets, and not them. To enhance the scheme further, we can say that you must bet against K different players at the same time (K here is a player count, unrelated to the bet size N), and the players must be pseudorandomly selected from a seed; if you want to target a specific player, you can do so by trying different seeds until you get your desired target alongside a few others, but there will always be at least some plausible deniability. Another possible enhancement, though one which has its costs, is to require players to only register their bets between commitment and revelation, only revealing and executing the bets long after many rounds of the game have taken place (we assume that there is a long period before security deposits can be taken out for this to work).
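To make the seed-based enhancement concrete, here is one possible sketch in Python. The selection rule (hashing the seed with a counter and drawing without replacement), the function names and the player labels are all assumptions made for illustration; `k` is the number of players a single bet must cover.

```python
import hashlib


def select_targets(seed: bytes, players: list[str], k: int) -> list[str]:
    """Deterministically pick k distinct players from a seed (hypothetical rule)."""
    if not 0 < k <= len(players):
        raise ValueError("k must be between 1 and the number of players")
    pool = sorted(players)
    chosen = []
    counter = 0
    while len(chosen) < k:
        digest = hashlib.sha3_256(seed + counter.to_bytes(8, "big")).digest()
        index = int.from_bytes(digest, "big") % len(pool)
        chosen.append(pool.pop(index))  # draw without replacement
        counter += 1
    return chosen


def grind_seed(target: str, players: list[str], k: int, max_tries: int = 10_000):
    """Try successive seeds until the selection happens to include the target."""
    for i in range(max_tries):
        seed = i.to_bytes(8, "big")
        picked = select_targets(seed, players, k)
        if target in picked:
            return seed, picked
    return None


players = [f"player{i}" for i in range(20)]
result = grind_seed("player7", players, k=3)
if result is not None:
    seed, picked = result
    print(seed.hex(), picked)
```

Anyone can recompute `select_targets` from the published seed, so an observer sees only that three players were drawn; they cannot tell which of the three, if any, the bettor was actually after.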

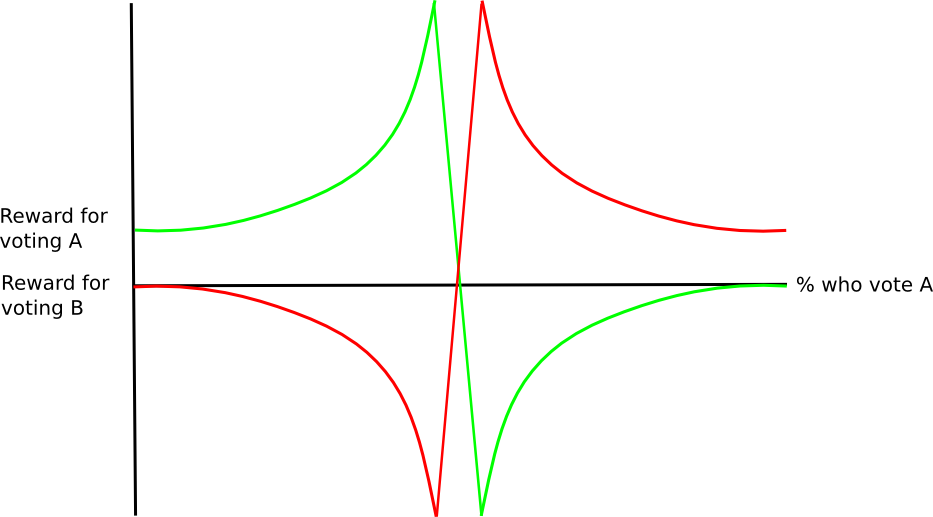

Now, how do we convert this into the oracle scenario? Consider once again the simple binary case: users report either A or B, and some portion P, unknown before the end of the process, will report A and the remaining 1-P will report B. Here, we change the scheme somewhat: the bets now say “I am confident that this person will vote X with more than P probability”. Note that the language of the bet should not be taken to imply knowledge of P; rather, it implies an opinion that, whatever the probability a random user will vote X is, the one particular user that the bettor is targeting will vote X with higher probability than that. The rules of the bet, processed after the voting phase, are that if the target votes X then N * (1 – P) coins are transferred from the target to the bettor, and otherwise N * P coins are transferred from the bettor to the target.
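A small Python sketch of this payout rule follows. The function names are hypothetical, `p_x` is taken to mean the fraction of all voters who end up voting X (an interpretive assumption: P when X = A, 1 - P when X = B), and `q` is an illustrative parameter for the target's true probability of voting X.

```python
from fractions import Fraction


def settle_oracle_bet(target_voted_x: bool, p_x: Fraction, n: int) -> Fraction:
    """Bettor's gain: +N * (1 - P) if the target voted X, otherwise -N * P."""
    if not 0 <= p_x <= 1:
        raise ValueError("p_x must be a fraction between 0 and 1")
    return n * (1 - p_x) if target_voted_x else -n * p_x


def expected_gain(q: Fraction, p_x: Fraction, n: int) -> Fraction:
    """Expected bettor gain when the target votes X with probability q."""
    win = settle_oracle_bet(True, p_x, n)
    loss = settle_oracle_bet(False, p_x, n)
    return q * win + (1 - q) * loss


p = Fraction(9, 10)  # 90% of voters report X
print(settle_oracle_bet(True, p, 100))            # 10
print(settle_oracle_bet(False, p, 100))           # -90
print(expected_gain(Fraction(9, 10), p, 100))     # 0
print(expected_gain(Fraction(95, 100), p, 100))   # 5
```

Expanding the expectation gives q * N * (1 - P) - (1 - q) * N * P = N * (q - P), so the bet breaks even against an average voter and pays only when the bettor knows the target is more likely than average to vote X.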

Note that, in the normal case, profit here is even more guaranteed than it is in the binary RANDAO example above: most of the time, if A is the truth, everyone votes for A, so the bets would be very low-risk profit grabs even if complex zero-knowledge-proof protocols were used to only give probabilistic assurance that they will vote for a particular value.

Side technical note: if there are only two possibilities, then why can’t you determine R[i] from h(R[i]) just by trying both options? The answer is that users are actually publishing h(R[i], n) and (R[i], n) for some large random nonce n that will get discarded, so there is too much space to enumerate.
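The difference the nonce makes can be seen in a short Python sketch. The helper names are hypothetical, `hashlib.sha3_256` stands in for h, and plain concatenation of a one-byte vote with the nonce is an assumed encoding.

```python
import hashlib
import secrets


def commit(vote: bytes, nonce: bytes = b"") -> bytes:
    """h(R, n): hash of the vote followed by the nonce."""
    return hashlib.sha3_256(vote + nonce).digest()


def verify(commitment: bytes, vote: bytes, nonce: bytes = b"") -> bool:
    """Check a revealed (vote, nonce) pair against the published hash."""
    return secrets.compare_digest(commitment, commit(vote, nonce))


def brute_force(commitment: bytes, options: list[bytes]):
    """Try every possible vote with no nonce; works only on unsalted commitments."""
    for option in options:
        if commit(option) == commitment:
            return option
    return None


options = [b"A", b"B"]

bare = commit(b"A")
print(brute_force(bare, options))      # b'A': two guesses are enough

nonce = secrets.token_bytes(32)        # large random nonce, discarded after the reveal
salted = commit(b"A", nonce)
print(brute_force(salted, options))    # None: the nonce space cannot be enumerated
print(verify(salted, b"A", nonce))     # True
print(verify(salted, b"B", nonce))     # False
```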

As another point, note that this scheme is in a sense a superset of Paul Sztorc’s counter-coordination scheme described above: if someone convinces someone else to falsely vote B when the real answer is A, then they can secretly bet against them using this information. In particular, profiting from others’ moral turpitude would no longer be a public good, but rather a private good: an attacker who tricks someone else into a false collusion could gain 100% of the profit, so there would be even more reluctance to join a collusion that’s not cryptographically provable.

Now, how does this work in the linear case? Suppose that users are voting on the BTC/USD price, so they need to supply not a choice between A and B, but rather a scalar value. The lazy solution is simply to apply the binary approach in parallel to every binary digit of the price; an alternative solution, however, is range betting. Users can make bets of the form “I am confident that this person will vote between X and Y with higher probability than the average person”; in this way, revealing to anyone else even roughly what value you are going to vote for is likely to be costly.
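The text does not spell out payouts for range bets, so the following Python sketch simply assumes they mirror the binary oracle rule, with P replaced by the fraction of all voters whose value lands in the range. The function name and the sample votes are made up for illustration.

```python
from fractions import Fraction


def settle_range_bet(votes: dict[str, float], target: str,
                     low: float, high: float, n: int) -> Fraction:
    """Bettor's gain for a bet that `target` votes within [low, high].

    Assumed rule: P is the share of all votes inside the range; the bettor
    gains N * (1 - P) if the target is inside, and pays N * P otherwise.
    """
    inside = sum(1 for value in votes.values() if low <= value <= high)
    p = Fraction(inside, len(votes))
    if low <= votes[target] <= high:
        return n * (1 - p)
    return -n * p


votes = {"alice": 250.0, "bob": 251.5, "carol": 249.0, "dave": 262.0}

# Someone who learned that dave would report a high price bets on [260, 265].
print(settle_range_bet(votes, "dave", 260.0, 265.0, n=100))   # 75
# The same range bet against carol loses, but only N * P.
print(settle_range_bet(votes, "carol", 260.0, 265.0, n=100))  # -25
```

Under this assumed rule, the narrower and more unusual the range, the more a correct bet pays, which is what makes even a rough hint about one's vote expensive to give away.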

Problems

What are the weaknesses of the scheme? Perhaps the largest one is that it opens up an opportunity to “second-order grief” other players: although one cannot, in expectation, force other players to lose money to this scheme, one can certainly expose them to risk by betting against them. Hence, it may open up opportunities for blackmail: “do what I want or I’ll force you to gamble with me”. That said, this attack does come at the cost of the attacker themselves being subjected to risk.

The simplest way to mitigate this is to limit the amount that can be gambled, and perhaps even limit it in proportion to how much is bet. That is, if P = 0.1, allow bets up to $1 saying “I am confident that this person will vote X with more than 0.11 probability”, bets up to $2 saying “I am confident that this person will vote X with more than 0.12 probability”, etc (mathematically advanced users may note that devices like logarithmic market scoring rules are good ways of efficiently implementing this functionality); in this case, the amount of money you can extract from someone will be quadratically proportional to the level of private information that you have, and performing large amounts of griefing is in the long run guaranteed to cost the attacker money, and not just risk.
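One way to see where the quadratic relationship comes from is the Python sketch below. It rests on two assumptions not stated in the text: the dollar limits are read as cumulative, so each 0.01 step in the stated threshold unlocks one more dollar of stake, and a dollar staked at threshold t pays out as in the oracle rule with t in place of P (expected profit q - t when the target's true probability is q).

```python
from fractions import Fraction

STEP = Fraction(1, 100)  # threshold increment from the example (0.11, 0.12, ...)


def expected_extraction(p: Fraction, q: Fraction) -> Fraction:
    """Expected profit, in dollars, from taking every $1 tranche priced below q."""
    total = Fraction(0)
    threshold = p + STEP
    while threshold < q:
        total += q - threshold  # one dollar staked at this threshold
        threshold += STEP
    return total


p = Fraction(1, 10)
print(expected_extraction(p, Fraction(2, 10)))  # 9/20   (edge 0.1)
print(expected_extraction(p, Fraction(3, 10)))  # 19/10  (edge 0.2)
print(expected_extraction(p, Fraction(5, 10)))  # 39/5   (edge 0.4)
```

Doubling the informational edge q - P roughly quadruples the extractable amount, while a bettor with no edge (q at or below the first threshold) can extract nothing in expectation.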

The second weakness is that if users are known to be using multiple particular sources of information, particularly on more subjective questions like “vote on the price of token A / token B” and not just binary events, then those users will be exploitable; for example, if you know that some users have a history of listening to Bitstamp and some to Bitfinex to get their vote information, then as soon as you get the latest feeds from both exchanges you can probabilistically extract some amount of money from a participant based on your estimation of which exchange they are listening to. Hence, it remains a research problem to see exactly how users would respond in that case.

Note that such events are a complicated issue in any case; failure modes such as everyone centralizing on one particular exchange are very likely to arise even in simple Sztorcian schemes without this kind of probabilistic griefing. Perhaps a multi-layered scheme with a second-layer “appeals court” of voting at the top that is invoked so rarely that the centralization effects never end up taking place may mitigate the problem, but it remains a highly empirical question.